Dolby Atmos

Spatial Mastering is pleased to offer our clients a premium, professional Dolby Atmos mixing and mastering service.

Dolby Atmos ADM BWF (Audio Definition Model Broadcast Wave Format) file in 7.1.4, ready for distribution.

Binaural stereo file and, if required, 5.1 and 7.1 files at no extra cost.

The ADM BWF file includes head-tracking integration, which is a must for Apple’s Spatial Audio streaming service.

Our service allows your music to be streamed in Dolby Atmos on services such as Apple Music, Tidal, Amazon Music, Vibe, Hungama and Melon.

At Spatial Mastering we have worked with Spatial Audio for more than a decade, and with Dolby Atmos from its beginning.

Why Dolby Atmos, and what’s the big difference from Stereo and 3D Audio?

Two main factors make all the difference with Dolby Atmos:

OBI (Object-Based Immersive) and Head Tracking

Dolby developed OBI to avoid an unnecessary increase in channel counts. An object is, in essence, an audio signal that is not limited to a single output channel: it can be placed anywhere in 3D space at specific X, Y, and Z coordinates. The audio and its positional metadata are delivered together from the production environment to the Dolby Atmos decoder in the consumer playback environment.

The decoder handles the information to determine where the audio should be positioned in space, employing an algorithm to optimally route the audio to the available output channels that feed the correct speakers in each context.

Object-based audio refers to the practice of using distinct objects to represent particular sounds in the mix.
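As a rough illustration of the idea, an object can be thought of as a named sound plus the position metadata that travels with it. The sketch below is only a model of that concept: the class name, the field names and the -1.0 to 1.0 coordinate range are assumptions made for this example, not Dolby's actual metadata format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AudioObject:
    """Hypothetical model of one sound in the mix and its position in 3D space."""
    name: str
    x: float  # assumed convention: -1.0 = far left, +1.0 = far right
    y: float  # assumed convention: -1.0 = back, +1.0 = front
    z: float  # assumed convention: -1.0 = below, +1.0 = above

    def __post_init__(self):
        # Reject coordinates outside the assumed normalised room.
        for axis in (self.x, self.y, self.z):
            if not -1.0 <= axis <= 1.0:
                raise ValueError("coordinates must lie in [-1.0, 1.0]")

    def metadata(self) -> dict:
        """Return the positional data that would accompany the audio signal."""
        return {"object": self.name,
                "position": {"x": self.x, "y": self.y, "z": self.z}}


vocal = AudioObject("lead vocal", x=0.0, y=1.0, z=0.0)
flyover = AudioObject("synth sweep", x=-0.5, y=0.0, z=1.0)
print(vocal.metadata())
print(flyover.metadata())
```

The audio itself is left out on purpose: the point is that the position is described as data, separately from any speaker channel.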

Head tracking is an essential component of binaural audio and Dolby Atmos when streamed via Apple Music.

Our brain automatically moves our head slightly to localise sound better. Therefore, head tracking is not just helpful in enhancing the live concert experience but also for spatial localisation in general. Once consumers grasp the full potential of head-tracking and spatial audio, they will realise that it is not only essential when watching movies with a visual reference point, but it may also enhance the music listening experience. Current research and development efforts in this field are intensive.

In daily life, three-dimensional sound experiences are common. Humans’ ability to make sense of their surroundings and interact with them relies greatly on spatial awareness, and hearing plays a crucial role in this process. Natural sounds are perceived based on their location and, less consciously, their magnitude, although most people rely more strongly on their visual sense for this information.

Sound source localisation is paramount for comfort of life. It means determining the position of a sound source in three dimensions: azimuth, elevation, and distance. It is based on three types of cues: two binaural (inter-aural time difference and inter-aural level difference) and one monaural spectral cue (described by the head-related transfer function).
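To give a feel for the size of the inter-aural time difference, the sketch below estimates it with Woodworth's classic spherical-head approximation. This formula is our choice for illustration, not something Dolby Atmos is documented to use, and the head radius and speed of sound are assumed typical values.

```python
import math

HEAD_RADIUS_M = 0.0875       # assumed average head radius
SPEED_OF_SOUND_M_S = 343.0   # assumed speed of sound in air at about 20 °C


def interaural_time_difference(azimuth_deg: float) -> float:
    """Estimate the ITD in seconds for a distant source (Woodworth approximation).

    azimuth_deg: 0 is straight ahead, +90 is directly to the right,
    -90 directly to the left. A positive result means the right ear leads.
    """
    if not -90.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be between -90 and 90 degrees")
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


for angle in (0, 30, 60, 90):
    microseconds = interaural_time_difference(angle) * 1e6
    print(f"{angle:>2} degrees: {microseconds:.0f} microseconds")
```

Even for a source directly to one side, the difference stays well under a millisecond, which shows how fine the timing cues are that the brain works with.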

Dolby Atmos technology provides a virtual yet realistic hearing experience based on the cues mentioned above.

Mono

Mono audio is single-channel audio, one of the most used formats. Mono sends all audio signals through a single channel for playback.

Stereo

Stereo is two-channel audio. When listening to stereo audio, it is possible to localise audio sources to the left and right but not above, behind or below.

A stereo mix can provide a spectrum of positional information across the left and right speakers. Whereas a single speaker emits sound from a single location, a stereo pair can create the impression of a sound being located anywhere between the two speakers by adjusting the output balance of the speakers. However, the sound remains ‘anchored’ to a line between the speakers. From the listener’s perspective, sounds appear to originate from either speaker position or anywhere along the line between them. This positional illusion can be altered by the arrangement of the listening space, elements within the room and the listener’s closeness to the speakers. Stereo spatialisation becomes less effective as the listener’s distance from the speakers increases.
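The "output balance" that places a sound between the speakers can be sketched with a constant-power pan law, one common convention among several. The function name and the -1.0 to 1.0 pan range are assumptions for this example.

```python
import math


def constant_power_pan(pan: float) -> tuple:
    """Return (left_gain, right_gain) for a pan position.

    pan: -1.0 is hard left, 0.0 is centre, +1.0 is hard right.
    The squared gains always sum to 1, so perceived loudness stays
    roughly constant as the sound moves along the line between the speakers.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1.0 and 1.0")
    angle = (pan + 1.0) * math.pi / 4.0  # maps the pan range onto 0..90 degrees
    return math.cos(angle), math.sin(angle)


for position in (-1.0, -0.5, 0.0, 0.5, 1.0):
    left, right = constant_power_pan(position)
    print(f"pan {position:+.1f}: L={left:.3f} R={right:.3f}")
```

However the gains are chosen, the result only ever moves the sound along that single left-right line, which is the limitation described above.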

Stereo spatialisation works better on headphones, which also provide a more convincing sense of depth. Even if the listener moves, the headphone drivers remain at a constant distance from the ears. This means that the listener is always in the ‘sweet spot’, with no factors in the listening space interfering with the effect.

Let’s explore the world of Spatial Audio! But hold on: what exactly do we mean by Spatial Audio? Are we referring to Immersive Audio, 3D Sound, Surround Sound, Binaural, Auro-3D, Dolby Atmos, 360-sound, or something else? Yes, to a point. In general, we are discussing any sound format beyond stereo, which is not a novel concept in itself. However, with major platforms like Apple, Facebook and YouTube fully implementing Spatial Audio technology, it is more relevant than ever for music fans and immersive audio creators.

Dolby Laboratories introduced Dolby Atmos in 2012, with Disney’s Brave being the first film to be mixed in the format. Dolby Atmos is ten years old and was initially created for film, not music; this explains the difficulties encountered when mixing music in Dolby Atmos (Wildsound, 2018). Beginning in the 1900s, sound recordings and reproductions were mono, with a single source from which sound was delivered. In the 1930s, stereophonic recordings used two speakers to produce a one-dimensional sound field. Later, with the development of surround sound, one-dimensional sound reproduction was extended to a two-dimensional sound field by installing additional speakers around the listener. Although Ray Dolby is credited with inventing surround sound in the 1970s, there were earlier trials, such as in the Disney film Fantasia in the 1940s.

The next evolutionary stage was the transition from a two-dimensional to a three-dimensional sound field; this is the origin of the term 3D audio. In addition to the speakers surrounding the listener (hence the name surround sound, as in 5.1), there are extra speakers above the listener. This means the listener is immersed in sound coming from all directions, hence the phrase immersive audio.

There was a different pace in the evolution of sound reproduction between movies and music. Film sound followed a straightforward path: the first sound in movies started in the 1920s as mono; then in the 1930s came stereo sound experiments (Rapoport, 2015); and in the late 1970s, the early surround sound formats were developed, mainly by Dolby.

Over the years, almost every film and many television programmes and broadcasts have been mixed in surround. The transition to immersive sound began in 2011 with the release of Auro-3D, followed in 2012 by Dolby Atmos, which became the dominant format and was initially created for cinema sound.

Recorded sound without image (music-only recordings) began in the late 1800s, initially in mono, followed by the introduction of stereo in the 1930s. Stereo was wildly successful, first on vinyl, subsequently on cassette tapes, then CDs, and now in digital file distribution and streaming.

Dolby released Dolby Atmos Music in 2019, the first step into immersive sound for commercial music formats. But what happened in the interim? What became of the surround era in music recording?

It was a considerable gap; music had been largely confined to stereo since the 1930s, at least for commercial music creation. The failure of quadraphonic sound in the 1970s and of DVD-Audio from 2000 to 2007 showed that the mass consumer public was not interested in anything other than stereo when listening to music.

New technologies always bring new terminology. Although terminology is supposed to make life easier by using particular words with agreed-upon meanings, those meanings frequently drift. A term might imply different things depending on whom you ask.

Here are some sound reproduction examples. The term ‘immersive audio’ refers to sound systems that replicate a three-dimensional sound field; the terms 3D audio and 360 audio have the same meaning. Unfortunately, some individuals use the term surround to denote immersive audio, which can be confusing when distinguishing between established two-dimensional sound formats, like quad, 5.1, or 7.1, and immersive three-dimensional sound formats. Many more terms are connected to immersive sound, such as Ambisonics, Auro-3D, 360 sound, 360 Reality Audio and Dolby Atmos.

Finally, binaural audio is essential to the success of immersive audio.

The term object-based describes the primary distinction between Dolby Atmos and channel-based stereo or surround formats.

In a channel-based mix, individual DAW tracks are routed to a stereo output bus (two channels) or a surround bus (more than two channels).

Each track’s panner controls which channels the signal goes to: for instance, Left (L), Right (R), Centre (C), Left Side Surround (Lss), Right Side Surround (Rss), Left Rear Surround (Lrs), Right Rear Surround (Rrs) and Low-Frequency Effects (LFE).

The mix is committed to a specific channel count, such as stereo, Quad, 5.1, or 7.1, and we require a device with the same number of speakers to play it back. Object-based systems remove this limitation.
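The channel-count commitment can be made concrete with a small sketch that reads the usual dotted layout notation (main speakers, LFE, and optionally height speakers). The function names are hypothetical and exist only for this example.

```python
def parse_layout(layout: str) -> tuple:
    """Split a layout such as '5.1' or '7.1.4' into its numeric parts.

    The parts are main speakers, LFE channels and, optionally, height speakers.
    """
    parts = layout.split(".")
    if len(parts) not in (2, 3) or not all(p.isdigit() for p in parts):
        raise ValueError(f"unrecognised layout: {layout!r}")
    return tuple(int(p) for p in parts)


def channel_count(layout: str) -> int:
    """Total number of channels a channel-based mix in this layout carries."""
    return sum(parse_layout(layout))


def plays_natively(mix_layout: str, speaker_layout: str) -> bool:
    """A channel-based mix needs a playback system with the same layout."""
    return parse_layout(mix_layout) == parse_layout(speaker_layout)


for name in ("2.0", "4.0", "5.1", "7.1"):
    print(name, "->", channel_count(name), "channels")
print(plays_natively("7.1", "5.1"))
```

Here stereo is written as 2.0 and quad as 4.0. A 7.1 mix on a 5.1 system fails the check, which is exactly the mismatch that object-based delivery is meant to avoid.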

Object-based audio is accompanied by metadata in the file that describes the exact position of a sound in the audio reproduction environment.

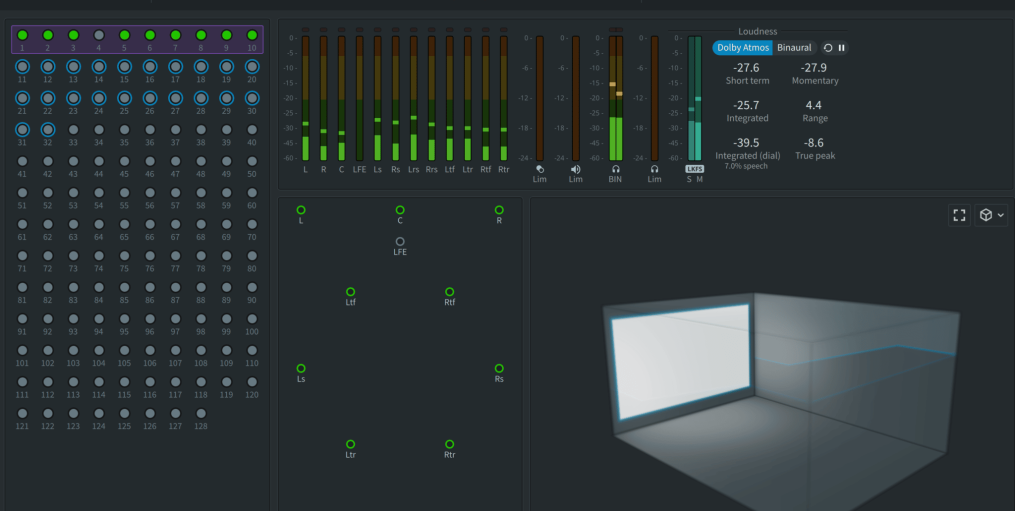

After inserts and level, each track’s output is routed straight, without pan controls, to one of the 128 Dolby Atmos input channels.

The pan control on each track still works; however, instead of routing the audio signal to the output channels, it now generates metadata, like GPS data, that describes the X, Y, and Z coordinates of where the audio signal is in 3D space.

This metadata will be provided to the Dolby Atmos renderer alongside the audio signal. This track’s signal is an object.

The first 10 of the Dolby Atmos renderer’s 128 input channels are allocated to a conventional channel-based routing system.

In the DAW, we can route a track or multiple tracks to a multi-channel bus, such as 7.1.2, using the surround panner. Those ten audio channels are sent to 10 input channels of the Dolby Atmos renderer with the pan information already contained in the channels; this is called a bed.

Before we begin, we must prepare the Dolby Atmos renderer and assign the 128 renderer channels, or the ones we need, to an object or a bed, then map and route the individual DAW tracks to those objects or bed channels.

There are established principles for routing a sound or instrument to a bed or an object, but lately it has become a matter of personal preference for the producer.

Channels 1 through 10 can only be allocated to a bed (2.0 up to 7.1.2), whereas the remaining 118 channels can be assigned to objects. We can set any of the remaining 118 channels as extra beds, but then they are no longer available for use as objects. Signals that are spatially fixed or that have been pre-recorded in a surround format should be routed to a bed. Sounds we want to place at exact spatial locations or move around are better mapped to objects. Only tracks routed to a bed in our DAW can be delivered to the LFE; tracks assigned to objects cannot reach the LFE channel without additional routing.
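The allocation described above can be sketched as simple bookkeeping: inputs 1 to 10 stay reserved for the bed, and each object takes the next free input from 11 to 128. The function and constant names are hypothetical, and the sketch ignores the option of using object inputs as extra beds.

```python
TOTAL_INPUTS = 128   # renderer input channels
BED_INPUTS = 10      # inputs 1-10 are reserved for the bed


def allocate_object_inputs(object_names: list) -> dict:
    """Assign each named object to its own renderer input, starting at 11."""
    if len(set(object_names)) != len(object_names):
        raise ValueError("object names must be unique")
    available = TOTAL_INPUTS - BED_INPUTS
    if len(object_names) > available:
        raise ValueError(f"only {available} inputs are available for objects")
    return {name: BED_INPUTS + 1 + index
            for index, name in enumerate(object_names)}


routing = allocate_object_inputs(["lead vocal", "guitar solo", "synth sweep"])
for name, renderer_input in routing.items():
    print(f"{name} -> renderer input {renderer_input}")
```

In a real session this mapping is set up in the renderer and the DAW's routing pages; the sketch only shows why the object budget stops at 118.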

Dolby Atmos Renderer

The Dolby Atmos Renderer is the crucial element that differentiates Dolby Atmos from other sound formats with high speaker-channel counts.

Channel-based formats are fixed and limited in terms of channel count.

Dolby Atmos is playback agnostic. We create only one Dolby Atmos mix, which can play on systems with any speaker configuration: 7.1.2, 7.1.4, 5.1, stereo, binaural headphones, or any of the Dolby Atmos-compatible smart speakers or sound bars. The idea is simple: up to 128 audio channels and their individual pan information (the specific location of each audio signal) are sent into the renderer, which processes that data in real time to produce a channel-based output. The only information we need to supply to the renderer is the channel-based output format we desire, such as 7.1.2, 5.1, binaural headphones, or stereo. Whatever x, y, and z position a signal has, the renderer attempts to place it on the available speakers in that arrangement. The more speaker channels we have, the more precise the spatial reproduction will be; the fewer channels we have, the less accurate the end result. If no height speakers are available, the signals will be routed to the nearest surround speakers. If no surround speakers are available, the signals will be incorporated into the stereo mix. The mix we hear at the end may not be immersive or even surround, but we will not lose any signals from the original Dolby Atmos mix.
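The behaviour described above can be imitated with a toy renderer. The sketch below uses generic distance-based amplitude panning, which is not Dolby's proprietary algorithm, and the speaker coordinates and layout names are invented for illustration. It shows one object position producing sensible gains on whatever speakers exist, with the total power preserved in every layout.

```python
import math

# Hypothetical speaker positions as (x, y, z): x = left/right, y = back/front,
# z = height. These are illustrative values, not Dolby's room coordinates.
# The LFE is left out because panned signals are not steered to it.
LAYOUTS = {
    "stereo": {"L": (-1, 1, 0), "R": (1, 1, 0)},
    "5.1": {"L": (-1, 1, 0), "R": (1, 1, 0), "C": (0, 1, 0),
            "Ls": (-1, -1, 0), "Rs": (1, -1, 0)},
    "5.1.2": {"L": (-1, 1, 0), "R": (1, 1, 0), "C": (0, 1, 0),
              "Ls": (-1, -1, 0), "Rs": (1, -1, 0),
              "Ltm": (-1, 0, 1), "Rtm": (1, 0, 1)},
}


def render_gains(position: tuple, layout: str, rolloff: float = 2.0) -> dict:
    """Return one gain per available speaker for an object at `position`.

    Closer speakers get more signal; the gains are normalised so their
    squared sum is 1, meaning no energy is lost in any layout.
    """
    weights = {}
    for name, speaker in LAYOUTS[layout].items():
        distance = math.dist(position, speaker)
        weights[name] = 1.0 / (distance + 1e-6) ** rolloff  # avoid dividing by zero
    norm = math.sqrt(sum(w * w for w in weights.values()))
    return {name: w / norm for name, w in weights.items()}


overhead_left = (-1.0, 0.0, 1.0)  # an object placed up and to the left
for layout in LAYOUTS:
    gains = render_gains(overhead_left, layout)
    print(layout, {name: round(g, 2) for name, g in gains.items()})
```

With height speakers present, the object lands almost entirely on the top-left speaker. Without them, it is shared between the nearest ear-level speakers, and in stereo it leans left, which mirrors the fallback behaviour described in the text.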

Dolby export and file format

During mixing, we select the speaker configuration we are listening on, for instance 7.1.4, and the renderer processes the (up to) 128 input audio signals according to their pan information to play the Atmos mix over those output channels. When the output format is changed, for example to binaural headphones, the renderer processes the same (up to) 128 input channels and their panning information to play the Atmos mix as a two-channel binaural audio signal. Completing the Dolby Atmos mix also brings a significant change in workflow: instead of bouncing the mix to a WAV file, we record it to a dedicated Dolby Atmos master file that maintains the separation of the (up to) 128 audio signals and the panning information.

To provide the Dolby Atmos master file to the mastering engineer, record label, or aggregator, the Dolby Atmos master file is exported as an ADM BWF file. The file contains up to 128 audio channels, the appropriate pan control metadata, all beds in a single stem, and all objects in separate stems.

The Atmos playback device has a renderer similar to the Dolby Atmos Renderer application. The consumer receives the Dolby Atmos mix as an encoded file that maintains the separation of audio and pan information despite data compression. The device’s renderer then calculates the Dolby Atmos mix according to the speaker arrangement of the end user’s playback system.

Binaural interaction procedures essentially evaluate how the auditory system processes the interaural time or intensity difference of one auditory event presented to both ears. Binaural interaction tasks require processing the complementary information coming from the two ears following the presentation of one acoustic signal presented simultaneously or sequentially. This is an important auditory ability to, for example, locate sound in the environment (Jutras et al., 2020).

Because our brain compares the sound entering the left and right ear and analyses the difference in level, timing, and frequency created by our head and ear anatomy, we can hear sounds in three dimensions all around us (Konishi, 2006). We may simulate binaural hearing by placing two small microphones in our ears and listening to the recordings through headphones.

Binaural recordings are frequently created with a dummy head (countless examples are available online). However, it is also easy to produce binaural audio using the right software and current technology.

We only need two audio channels to play it back over headphones, and we can create the illusion of music playing around us, as opposed to inside our heads or in only one ear, as with stereo headphones.

Two-channel stereo signals are listened to via stereo headphones. A binaural headset likewise plays back two channels, but a specific binaural signal creates a 3D listening experience.

We can produce binaural audio artificially by processing a signal and placing it anywhere in three-dimensional space. When played through headphones, the sound will appear to emanate from the 3D location where the signal was placed. This is referred to as binaural rendering.
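A heavily simplified version of this processing can be sketched by applying only the two binaural cues, a time delay and a level drop, to the ear facing away from the source. Real binaural renderers filter the signal with HRTFs instead; the function name, parameters and example values here are assumptions for illustration.

```python
def simple_binaural(mono: list, sample_rate: int, side: str,
                    itd_seconds: float, ild_db: float) -> tuple:
    """Turn a mono signal into (left, right) ear signals.

    side: "left" or "right", the side the source is on.
    itd_seconds: how much later the sound reaches the far ear (>= 0).
    ild_db: how much quieter the sound is at the far ear (>= 0).
    """
    if side not in ("left", "right"):
        raise ValueError("side must be 'left' or 'right'")
    if itd_seconds < 0 or ild_db < 0:
        raise ValueError("itd_seconds and ild_db must not be negative")
    delay = round(itd_seconds * sample_rate)   # delay in whole samples
    far_gain = 10 ** (-ild_db / 20)            # decibels to linear gain
    near = list(mono) + [0.0] * delay          # near ear: unchanged, padded
    far = [0.0] * delay + [s * far_gain for s in mono]  # far ear: later, quieter
    return (far, near) if side == "right" else (near, far)


click = [1.0, 0.5, 0.25]
left, right = simple_binaural(click, sample_rate=48000, side="right",
                              itd_seconds=0.0005, ild_db=6.0)
print(len(left), len(right))
print(right[:3], left[24:27])
```

Played over headphones, a signal processed this way tends to be heard towards one side rather than as a point in full 3D space, which is why the spectral shaping described next matters.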

An HRTF (head-related transfer function) is a mathematical description used in binaural rendering to simulate how the geometry of the head and ears shapes incoming sound. Because these shapes are unique to everyone, the binaural renderer in most applications employs an average shape, with the caveat that the greater the difference between our own shape and the average, the less convincing the 3D experience will seem. Therefore, there are differing opinions regarding how convincing binaural audio sounds.

The solution is to use our own measurements for binaural processing, often known as an individualised HRTF (obtained through a complex procedure performed in an anechoic room). This technology is still being developed, with large sums of money and effort invested by many companies. This means Dolby Atmos playback will improve significantly over the next few years as it becomes simpler to produce personalised HRTFs and import them into our playback device.

ADM BWF file – this is your file for Dolby Atmos distribution

Stereo version of the mix in WAV format – mastered

When required, 5.1 and 7.1 WAV files (no extra cost)

Once you have built your package and are ready to place your order, select the number of songs you wish to have mastered and click Add to Basket. Proceed through the checkout and pay for your mastering using PayPal or Worldpay. Once checkout is complete, you can upload your files via the upload page.